Agenda

The workshop takes place on Monday, Nov 13, 2017, from 9:00 a.m. to 5:30 p.m. in room 712. Details about all talks and speakers are given below the agenda.

Dear attendees: Please take a moment to provide feedback about your experience of the WACCPD workshop!

| Time | Event |
| --- | --- |
| 9:00 – 9:15 | Opening Remarks – Sunita Chandrasekaran & Guido Juckeland |
| 9:15 – 10:00 | Keynote: John E. Stone (UIUC) – Using Accelerator Directives to Adapt Science Applications for State-of-the-Art HPC Architectures |
| 10:00 – 10:30 | Coffee Break |
| Session 1: Applications (Chair: Adrian Jackson, EPCC) | |
| 10:30 – 11:00 | Pi-Yueh Chuang (George Washington University, USA) – An Example of Porting PETSc Applications to Heterogeneous Platforms with OpenACC |
| 11:00 – 11:30 | Michel Müller (University of Tokyo, Japan) – Hybrid Fortran: High Productivity GPU Porting Framework Applied to Japanese Weather Prediction Model |
| 11:30 – 12:00 | Takuma Yamaguchi, Kohei Fujita (University of Tokyo, Japan) – Implicit Low-Order Unstructured Finite-Element Multiple Simulation Enhanced by Dense Computation using OpenACC |
| 12:00 – 1:00 p.m. | Lunch |
| 1:00 – 1:30 | Invited Talk: Randy Allen (Mentor Graphics) – The Challenges Faced by OpenACC Compilers |
| Session 2: Runtime Environments (Chair: Jeff Larkin, NVIDIA) | |
| 1:30 – 2:00 | William Killian (University of Delaware, USA) – The Design and Implementation of OpenMP 4.5 and OpenACC Backends for the RAJA C++ Performance Portability Layer |
| 2:00 – 2:30 | Francesc-Josep Lordan Gomis (BSC, Spain) – Enabling GPU support for the COMPSs-Mobile framework |
| 2:30 – 3:00 | Christopher Stone (Computational Science & Engineering, LLC, USA) – Concurrent parallel processing on Graphics and Multicore Processors with OpenACC and OpenMP |
| 3:00 – 3:30 | Coffee Break |
| Session 3: Program Evaluation (Chair: Guido Juckeland, HZDR) | |
| 3:30 – 4:00 | Akihiro Hayashi (Rice University, USA) – Exploration of Supervised Machine Learning Techniques for Runtime Selection of CPU vs. GPU Execution in Java Programs |
| 4:00 – 4:30 | Khalid Ahmad (University of Utah, USA) – Automatic Testing of OpenACC Applications |
| 4:30 – 5:00 | Christian Terboven (RWTH Aachen University, Germany) – Evaluation of Asynchronous Offloading Capabilities of Accelerator Programming Models for Multiple Devices |
| 5:00 – 5:15 | Closing and Best Paper Award |

Keynote: Using Accelerator Directives to Adapt Science Applications for State-of-the-Art HPC Architectures

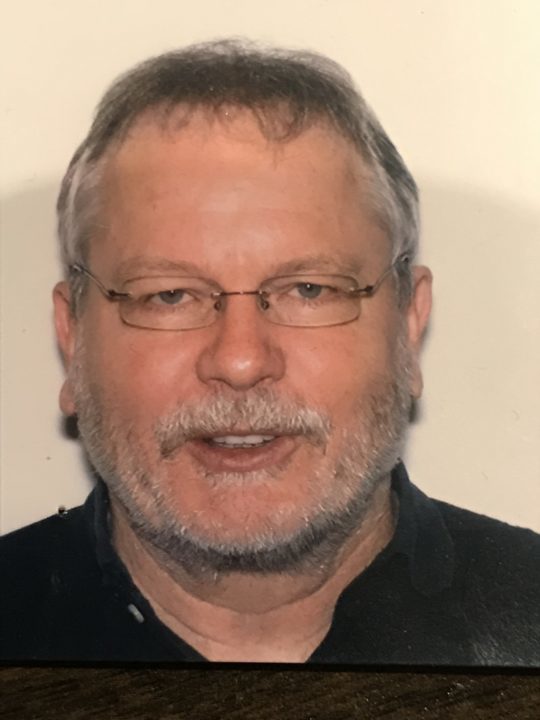

John E. Stone

The keynote of the workshop will be presented by John E. Stone, Senior Research Programmer, Theoretical and Computational Biophysics Group and NIH Center for Macromolecular Modeling and Bioinformatics at the University of Illinois at Urbana-Champaign.

John Stone is the lead developer of VMD, a high performance molecular visualization tool used by researchers all over the world. His research interests include molecular visualization, GPU computing, parallel computing, ray tracing, haptics, virtual environments, and immersive visualization. Mr. Stone was inducted as an NVIDIA CUDA Fellow in 2010. In 2015 Mr. Stone joined the Khronos Group Advisory Panel for the Vulkan Graphics API. In 2017 Mr. Stone was named an IBM Champion for Power for innovative thought leadership in the technical community. He also provides consulting services for projects involving computer graphics, GPU computing, and high performance computing.

Abstract

The tremendous early success of accelerated HPC systems has been due to their ability to reach key computational performance targets with high energy efficiency, and without placing undue burden on the developers of HPC applications software. Upcoming accelerated systems are bringing tremendous performance levels and technical capabilities, but fully exploiting these advances requires science applications to incorporate fine-grained parallelism pervasively, even deep within application code that historically was not considered performance-critical.

Accelerator directives provide a critical high-productivity path to resolving this requirement, even within applications that already make extensive use of hand-coded accelerator kernels. This talk will explore the role of accelerator directives and their complementarity to both hand-coded kernels and new hardware features to maintain high development productivity and performance for production scientific applications. The talk will also consider some potential challenges posed by both mundane and disruptive hardware evolution in future HPC systems and how they might relate to directive-based accelerator programming approaches.

An Example of Porting PETSc Applications to Heterogeneous Platforms with OpenACC

Pi-Yueh Chuang

Pi-Yueh Chuang is presenting this paper by Pi-Yueh Chuang (The George Washington University, USA) and Fernanda Foertter (Oak Ridge National Lab, USA).

Pi-Yueh Chuang is a Ph.D. student in the Mechanical Engineering Department at the George Washington University. His current research interests include computational fluid dynamics and high-order numerical methods. As an intern at Oak Ridge National Laboratory in summer 2017, he worked on developing OpenACC documentation and examples. His prior research experience includes numerical methods for nanoscale energy transport and for plastic injection molding.

Abstract

In this paper, we document the workflow of our practice to port a PETSc application with OpenACC to a supercomputer, Titan, at Oak Ridge National Laboratory. Our experience shows a few lines of code modifications with OpenACC directives can give us a speedup of 1.34x in a PETSc-based Poisson solver (conjugate gradient method with algebraic multigrid preconditioner). This demonstrates the feasibility of enabling GPU capability in PETSc with OpenACC. We hope our work can serve as a reference to those who are interested in porting their legacy PETSc applications to modern heterogeneous platforms.

Hybrid Fortran: High Productivity GPU Porting Framework Applied to Japanese Weather Prediction Model

Michel Müller

Michel Müller is presenting this paper. Authors: Michel Müller (The University of Tokyo, Japan) and Takayuki Aoki (Tokyo Institute of Technology, Japan).

Michel Müller is a Ph.D. student at the Tokyo Institute of Technology. He graduated from the Master’s course in Electrical Engineering and Information Technology at ETH Zurich in 2012. His first contact with GPGPU programming was a semester thesis in 2011 on the feasibility of GPUs for the COSMO weather model. During and after his ETH studies he worked in industry as a software architect at ATEGRA AG Switzerland. His interest in GPUs eventually led him to Tokyo, where he became interested in productivity issues in HPC software as a guest researcher at Aoki Laboratory. Since 2015, Michel has been pursuing a Ph.D. on the topic of applying GPUs to the Japanese weather model “ASUCA” while retaining the original user code as much as possible.

Abstract

In this work we use the GPU porting task for the operative Japanese weather prediction model “ASUCA” as an opportunity to examine productivity issues with OpenACC when applied to structured grid problems. We then propose “Hybrid Fortran”, an approach that combines the advantages of directive-based methods (no rewrite of existing code necessary) with those of stencil DSLs (memory layout is abstracted). This gives the ability to define multiple parallelizations with different granularities in the same code. Without compromising on performance, this approach enables a major reduction in the code changes required to achieve a hybrid GPU/CPU parallelization – as demonstrated with our ASUCA implementation with Hybrid Fortran.

Implicit Low-Order Unstructured Finite-Element Multiple Simulation Enhanced by Dense Computation using OpenACC

Takuma Yamaguchi

Takuma Yamaguchi and Kohei Fujita are presenting this paper. Authors: Takuma Yamaguchi, Kohei Fujita, Tsuyoshi Ichimura, Muneo Hori, Maddegedara Lalith and Kengo Nakajima (all from the University of Tokyo, Japan).

Takuma Yamaguchi is a Ph.D. student in the Department of Civil Engineering at the University of Tokyo, where he also received his B.E. and M.E. His research focuses on high-performance computing for earthquake simulation. More specifically, his work targets fast crustal deformation computation for multiple cases, enhanced by GPUs.

Kohei Fujita

Kohei Fujita is an assistant professor at the Department of Civil Engineering at the University of Tokyo. He received his Dr. Eng. from the Department of Civil Engineering, University of Tokyo in 2014. His research interest is the development of high-performance computing methods for earthquake engineering problems. He is a coauthor of SC14 and SC15 Gordon Bell Prize Finalist Papers on large-scale implicit unstructured finite-element earthquake simulations.

Abstract

In this paper, we develop a low-order three-dimensional finite-element solver for fast multiple-case crust deformation analysis on GPU-based systems. Based on a high-performance solver designed for massively parallel CPU-based systems, we modify the algorithm to reduce random data access, and then insert OpenACC directives. The developed solver on ten Reedbush-H nodes (20 P100 GPUs) attained a speedup of 14.2 times over 20 K computer nodes, which is high considering the peak memory bandwidth ratio of 11.4 between the two systems. On the newest Volta generation V100 GPUs, the solver attained a further 2.45 times speedup over P100 GPUs. As a demonstrative example, we computed 368 cases of crustal deformation analyses of northeast Japan with 400 million degrees of freedom. The total procedure of algorithm modification and porting implementation took only two weeks; we can see that high performance improvement was achieved with low development cost. With the developed solver, we can expect improvement in reliability of crust-deformation analyses by many-case analyses on a wide range of GPU-based systems.

The Challenges Faced by OpenACC Compilers

Randy Allen

Randy Allen is director of advanced research in the Embedded Systems Division of Mentor Graphics. His career has spanned research, advanced development, and start-up efforts centered around optimizing application performance. Dr. Allen has consulted on or directly contributed to the development of most HPC compiler efforts. He was the founder of Catalytic, Inc. (focused on compilation of MATLAB for DSPs), as well as a cofounder of Forte Design Systems (high-level synthesis). He has authored or coauthored more than 30 papers on compilers for high-performance computing, simulation, high-level synthesis, and compiler optimization, and he coauthored the book Optimizing Compilers for Modern Architectures. Dr. Allen earned his AB summa cum laude in chemistry from Harvard University, and his PhD in mathematical sciences from Rice University.

Abstract

It is easy to assume that a directive-based system such as OpenACC solves all the problems faced by compiler developers for high performance architectures. After all, the hard part of optimizing compilers for high performance architectures is figuring out what parts of the application to do in parallel, and OpenACC directives serve that information up to the compiler on a platter. What more is left for the compiler writer to do but to sit back, collect paychecks, and watch application developers do all of his/her work?

Unfortunately (or fortunately if you are employed as a compiler developer), the world is not so simple. While OpenACC directives definitely simplify development of some parts of optimizing compilers, they introduce a new set of challenges. Just because a user has said a loop can be done in parallel does not mean that the compiler automatically knows how to schedule it in parallel. For that matter, is the user actually applying intelligence when inserting directives, or is she/he instead randomly inserting directives to see what runs faster? The latter occurs far more often than many people suspect.

This talk briefly overviews the challenges faced by a compiler that targets high performance architectures and the assistance in meeting those challenges provided by a directive-based system like OpenACC. It will then present the challenges introduced to the compiler by directive-based systems, with the intent of steering application developers toward an understanding of how to assist compilers in overcoming the challenges and obtaining supercomputer performance on applications without investing superhuman effort.

The Design and Implementation of OpenMP 4.5 and OpenACC Backends for the RAJA C++ Performance Portability Layer

William Killian

William Killian is presenting this paper. Authors: William Killian (University of Delaware, USA), Tom Scogland, Adam Kunen (both from Lawrence Livermore National Laboratory, USA) and John Cavazos (Institute for Financial Services Analytics, USA).

William Killian is a Ph.D. candidate at the University of Delaware and an Assistant Professor at Millersville University. His research areas include predictive modeling, programming models, and program optimization. Prior to joining Millersville, Mr. Killian worked at Intel Corporation and Lawrence Livermore National Laboratory (LLNL), where he focused on application fitness, optimization, and performance modeling. William continues to collaborate with LLNL on abstraction layers that make it easier for users to create scientific applications that run on upcoming supercomputing systems. William earned his B.S. from Millersville University (’11) and M.S. from the University of Delaware (’13) and expects to complete his Ph.D. in early 2018.

Abstract

Portability abstraction layers such as RAJA enable users to quickly change how a loop nest is executed with minimal modifications to high-level source code. Directive-based programming models such as OpenMP and OpenACC provide easy-to-use annotations on for-loops and regions which change the execution pattern of user code. Directive-based language backends for RAJA have previously been limited to few options due to multiplicative clauses creating version explosion. In this work, we introduce an updated implementation of two directive-based backends which helps mitigate the aforementioned version explosion problem by leveraging the C++ type system and template meta-programming concepts. We implement partial OpenMP 4.5 and OpenACC backends for the RAJA portability layer which can apply loop transformations and specify how loops should be executed. We evaluate our approach by analyzing compilation and runtime overhead for both backends using PGI 17.7 and IBM clang (OpenMP 4.5) on a collection of computation kernels.

Enabling GPU support for the COMPSs-Mobile framework

Francesc-Josep Lordan Gomis

Francesc-Josep Lordan Gomis is presenting this paper. Authors: Francesc-Josep Lordan Gomis, Rosa M. Badia (both from Barcelona Supercomputing Center, Spain) and Wen-Mei Hwu (University of Illinois, Urbana-Champaign, USA).

Francesc Lordan was born in Barcelona, Spain, in 1987. He received the B.E. degree in informatics engineering from the Universitat Politècnica de Catalunya (UPC), Barcelona, Spain, in 2010, and the M.Tech. degree in computer architecture, network and systems from UPC in 2013. Since February 2010, he has been part of the Workflows and Distributed Computing group of the Barcelona Supercomputing Center (BSC), developing extensions of the COMPSs programming model to support execution on Cloud environments. Currently, he is working on his PhD thesis, advised by Rosa M. Badia, studying the problems of distributed programming for mobile cloud computing.

Abstract

Using the GPUs embedded in mobile devices increases the performance of the applications running on them while reducing the energy consumption of their execution. This article presents a task-based solution for adaptive, collaborative heterogeneous computing on mobile cloud environments. To implement our proposal, we extend the COMPSs-Mobile framework (an implementation of the COMPSs programming model for building mobile applications that offload part of the computation to the Cloud) to support offloading computation to GPUs through OpenCL. To evaluate the behavior of our solution, we subject the prototype to three benchmark applications representing different application patterns.

Concurrent parallel processing on Graphics and Multicore Processors with OpenACC and OpenMP

Christopher Stone is presenting this paper. Authors: Christopher Stone (Computational Science & Engineering, LLC, USA), Roger Davis and Daryl Lee (both from University of California Davis, USA).

Dr. Christopher P. Stone is a software developer and consultant in the areas of high-performance computing and computational science. He earned his BS in Physics from Wofford College in 1998 and his PhD in Aerospace Engineering from the Georgia Institute of Technology in 2003. Since then, he has held several research and academic positions including visiting assistant professor and research scientist/engineer. In 2007, he founded Computational Science & Engineering, LLC, a private scientific research and development firm working mostly on federally funded R&D projects in the areas of high-performance/parallel computing with computational science applications. Dr. Stone has been involved with the US DoD High Performance Computing Modernization Program (HPCMP) Productivity Enhancement, Technology Transfer, and Training (PETTT) program as a consultant since 2012. In this capacity, Dr. Stone provides assistance to users and application developers in performance optimization, algorithm refactoring, and transitioning to next-gen parallel architectures. Dr. Stone’s research interests include many-core parallel processing on hardware accelerators (e.g., graphics processing units) and vector-friendly algorithms for computationally intensive applications, particularly for computational fluid dynamics (CFD) and combustion modeling applications.

Abstract

Hierarchical parallel computing is rapidly becoming ubiquitous in high performance computing (HPC) systems. Programming models used commonly in turbomachinery and other engineering simulation codes have traditionally relied upon distributed memory parallelism with MPI and have ignored thread and data parallelism. This paper presents methods for programming multi-block codes for concurrent computation on host multicore CPUs and many-core accelerators such as graphics processing units. Portable and standardized language directives are used to expose data and thread parallelism within the hybrid shared- and distributed-memory simulation system. A single-source, multiple-object strategy is used to simplify code management and allow for heterogeneous computing. Automated load balancing is implemented to determine what portions of the domain are computed by the multicore CPUs and GPUs. Benchmark results show that significant parallel speed-up is attainable on multicore CPUs and many-core devices such as the Intel Xeon Phi Knights Landing using OpenMP SIMD and thread parallel directives. Modest speed-up, relative to a CPU core, was achieved with OpenACC offloading to NVIDIA GPUs. Combining GPU offloading with multicore host parallelism improved the single-device performance by 30%, but further speed-up was not realized when more heterogeneous CPU-GPU device pairs were included.

Exploration of Supervised Machine Learning Techniques for Runtime Selection of CPU vs. GPU Execution in Java Programs

Akihiro Hayashi

Akihiro Hayashi is presenting this paper. Authors: Gloria Kim, Akihiro Hayashi and Vivek Sarkar (all from Rice University, USA).

Dr. Hayashi is a research scientist at Rice University. His research interests include automatic parallelization, programming languages, and compiler optimizations for parallel computer systems.

Abstract

While multi-core CPUs and many-core GPUs are both viable platforms for parallel computing, programming models for them can impose large burdens upon programmers due to their complex and low-level APIs. Since managed languages like Java are designed to be run on multiple platforms, parallel language constructs and APIs such as Java 8 Parallel Stream APIs can enable high-level parallel programming with the promise of performance portability for mainstream (“non-ninja”) programmers. To achieve this goal, it is important for the selection of the hardware device to be automated rather than be specified by the programmer, as is done in current programming models. Due to a variety of factors affecting performance, predicting a preferable device for faster performance of individual kernels remains a difficult problem. While a prior approach makes use of machine learning algorithms to address this challenge, there is no comparable study on good supervised machine learning algorithms and good program features to track. In this paper, we explore 1) program features to be extracted by a compiler and 2) various machine learning techniques that improve accuracy in prediction, thereby improving performance. The results show that an appropriate selection of program features and machine learning algorithms can further improve accuracy. In particular, support vector machines (SVMs), logistic regression, and J48 decision tree are found to be reliable techniques for building accurate prediction models from just two, three, or four program features, achieving accuracies of 99.66%, 98.63%, and 98.28% respectively from 5-fold cross-validation.

Automatic Testing of OpenACC Applications

Khalid Ahmad

Khalid Ahmad is presenting this paper. Authors: Khalid Ahmad (University of Utah, USA) and Michael Wolfe (NVIDIA Corporation, USA).

Khalid Ahmad is a PhD student in the School of Computing at the University of Utah, where he also earned his M.S. His current research focuses on enhancing programmer productivity and performance portability by implementing an auto-tuning framework that generates and evaluates different floating point precision variants of a numerical or a scientific application while maintaining data correctness.

Abstract

CAST (Compiler-Assisted Software Testing) is a feature in our compiler and runtime to help users automate testing high performance numerical programs. CAST normally works by running a known working version of a program and saving intermediate results to a reference file, then running a test version of a program and comparing the intermediate results against the reference file. Here, we describe the special case of using CAST on OpenACC programs running on a GPU. Instead of saving and comparing against a saved reference file, the compiler generates code to run each compute region on both the host CPU and the GPU. The values computed on the host and GPU are then compared, using OpenACC data directives and clauses to decide what data to compare.

Evaluation of Asynchronous Offloading Capabilities of Accelerator Programming Models for Multiple Devices

Christian Terboven

Christian Terboven is presenting this paper. Authors: Jonas Hahnfeld, Christian Terboven (both from RWTH Aachen University, Germany), James Price (University of Bristol, UK), Hans Joachim Pflug and Matthias Mueller (both from RWTH Aachen University, Germany).

Dr. Christian Terboven is a senior scientist and leads the HPC group at RWTH Aachen University. His research interests center around parallel programming and related software engineering aspects. Christian has been involved in the analysis, tuning and parallelization of several large-scale simulation codes for various architectures and is responsible for several research projects in the areas of programming models for and productivity of modern HPC systems. Since 2006, he has been a member of the OpenMP Language Committee, where he leads the Affinity subcommittee.

Abstract

Accelerator devices are increasingly used to build large supercomputers, and current installations usually include more than one accelerator per system node. To keep all devices busy, kernels have to be executed concurrently, which can be achieved via asynchronous kernel launches. This work compares the performance of an implementation of the Conjugate Gradient method with CUDA, OpenCL, and OpenACC on NVIDIA Pascal GPUs. Furthermore, it takes a look at Intel Xeon Phi coprocessors when programmed with OpenCL and OpenMP. In doing so, it tries to answer the question of whether the higher abstraction level of directive-based models is inferior to lower-level paradigms in terms of performance.