Program Chairs

Rabab Alomairy, Computer Science and Artificial Intelligence Laboratory, Massachusetts Institute of Technology, USA

Andreas Herten, Forschungszentrum Jülich, Germany

Jorge Luis Gálvez Vallejo, Australian National University, Australia

General Chairs

Sridutt Bhalachandra, Lawrence Berkeley National Laboratory, USA

Sunita Chandrasekaran, University of Delaware, USA

Guido Juckeland, HZDR, Germany

WACCPD2024 Proceedings are now available

WACCPD 2024 conference proceedings

SC25 Conference

Proposal for WACCPD 2025 has been submitted to SC25, organised in St. Louis, Missouri, 16-21 November 2025.

About the Workshop

The inclusion of accelerators in HPC systems is becoming well-established, and there are many examples of successful deployments of heterogeneous systems today. We expect this trend to continue: accelerators are becoming even more widely used, a larger fraction of the system compute capability will be delivered by accelerators, and there will be an even tighter coupling of components in a compute node. The change in the HPC system landscape, enabled by both the increasing capability and usability of accelerators such as GPUs, ML/AI chips and QPUs, opens new computational possibilities and creates challenges related to algorithm design, portability and standardization of programming models. Technology enablers look for opportunities to further integrate compute components. In the context of GPUs, these efforts include higher bandwidth memory technologies with larger capacities, hardware-managed caches, and the ability to access CPU data directly. As a result, scientific software developers are offered a rich platform to exploit the multiple levels of parallelism in their applications. This year’s workshop will look at bringing together professionals working in the field of accelerated HPC and individuals exploring new accelerator technologies, including quantum computing.

In today’s HPC environment, systems with heterogeneous node architectures providing multiple levels of parallelism are omnipresent. The next generation of systems may feature GPU-like accelerators combined with other accelerators to provide improved performance for a wider variety of application kernels. Examples include ML/AI hardware, FPGAs, Data Flow chips, and QPUs. This introduces further complexity to application programmers because different programming languages and frameworks (e.g CUDA and HIP) may be required for each architectural component in a compute node. This type of specialization complicates maintenance and portability to other systems. Thus, the importance of programming approaches that can provide performance, scalability, and portability while exploiting the maximum available parallelism is increasing. It is highly desirable that programmers are able to keep a single code base to help ease maintenance and avoid the need to debug and optimize multiple versions of the same code. In the context of ML/AI hardware, this includes the use of different precision arithmetic for scientific computations and precision recovery. In the context of quantum computing, the standardization of computing models is extremely important, especially for leveraging existing hybrid programming models and enhancing compiler frameworks to support new hardware.

Exploiting the maximum available parallelism in such systems necessitates refactoring applications and adopting programming approaches that effectively utilize accelerators. Historically, directive-based models like OpenMP offloading and OpenACC were the dominant portable solutions. Today, the landscape has evolved: Since 2021, our workshop has expanded to cover a broader range of approaches, including Fortran/C++ standard language parallelism, SYCL, DPC++, Kokkos, RAJA, as well as task-based and data-centric models like Regent, Legion, and OmpSs. These models not only provide scalable and portable solutions without compromising performance but also play a critical role in hybrid MPI+X programming, where they serve as the “X” component alongside MPI for distributed-memory parallelism. In the context of quantum computing, portability efforts are following a similar trajectory. While well-established languages like Qiskit facilitate inter-quantum portability, newer portable models such as OpenQL are being developed, often leveraging existing task offloading techniques, including OpenMP. Our workshop will explore methods and case studies that demonstrate programmability, performance, and portability across HPC, AI, and quantum computing.

Workshop Important Deadlines

- Submission Deadline:

28 of July 2025, Midnight, AOE1st of August, 2025, Midnight, AOE - Author Notification: 29 of August, 2025,

- Camera Ready Deadline: 22 of September, 2025

Workshop format

WACCPD is a workshop centered on peer-reviewed and published technical papers. The workshop will have one invited talk. Depending on the type of submission talks will be scheduled for 15+5min (Full Paper) and 5+5min (Lightning Talk).

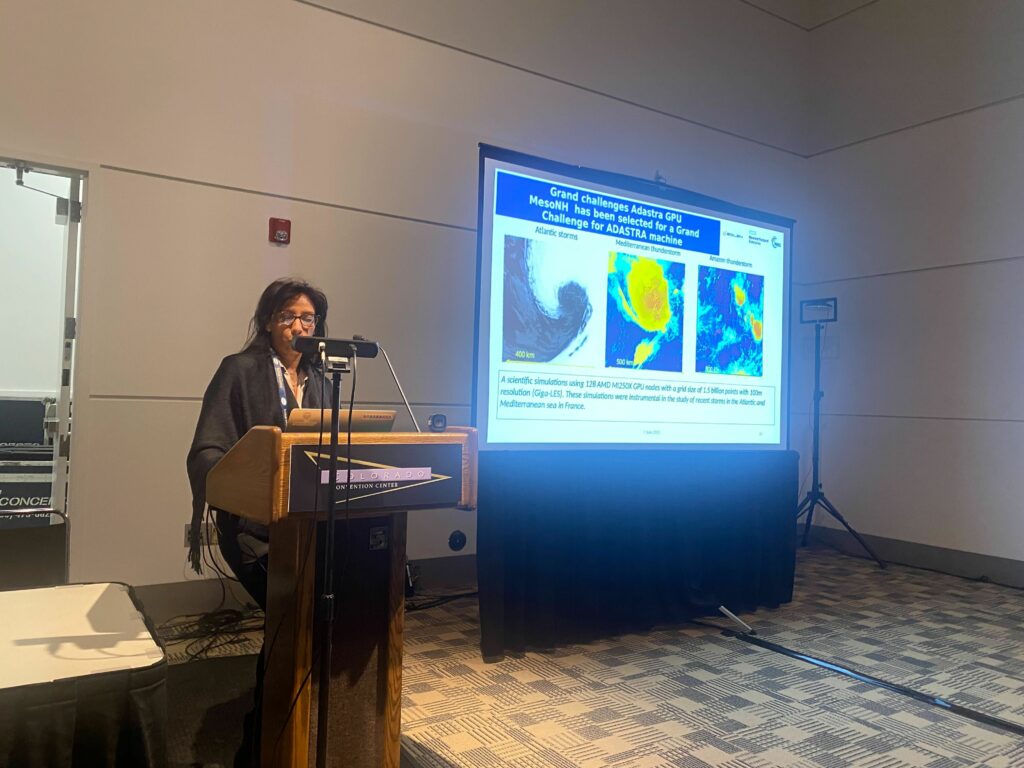

Impressions from previous workshops