The Best Paper(s) of the WACCPD workshop are chosen based on extensive reviews from expert reviewers on the Program Committee. We rank the papers based on review scores and reviewers’ confidence. The best papers demonstrate novel ideas enabling new science and are written with clarity and thorough experimental analysis. Their publication is regarded as instrumental for the field. We also encourage papers that produce open-source and reproducible software, although that is not a specific qualifying criterion for the best paper award.

2017

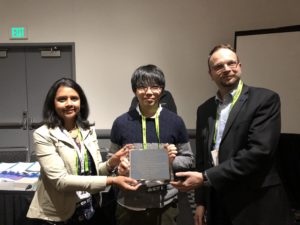

This year featured two papers with perfect scores from all reviewers. We were pleased to give out two awards.

Implicit Low-Order Unstructured Finite-Element Multiple Simulation Enhanced by Dense Computation using OpenACC

Takuma Yamaguchi

Takuma Yamaguchi and Kohei Fujita are presenting this paper. Authors: Takuma Yamaguchi, Kohei Fujita, Tsuyoshi Ichimura, Muneo Hori, Maddegedara Lalith and Kengo Nakajima (all from the University of Tokyo, Japan).

Takuma Yamaguchi is a Ph.D. student in the Department of Civil Engineering at the University of Tokyo and holds a B.E. and an M.E. from the University of Tokyo. His research focuses on high-performance computing for earthquake simulation. More specifically, his work targets fast, GPU-accelerated crustal deformation computation for multiple-case analyses.

Kohei Fujita is an assistant professor in the Department of Civil Engineering at the University of Tokyo. He received his Dr. Eng. from the Department of Civil Engineering, University of Tokyo in 2014. His research interest is the development of high-performance computing methods for earthquake engineering problems. He is a coauthor of SC14 and SC15 Gordon Bell Prize finalist papers on large-scale implicit unstructured finite-element earthquake simulations.

Kohei Fujita

Abstract

In this paper, we develop a low-order three-dimensional finite-element solver for fast multiple-case crust deformation analysis on GPU-based systems. Based on a high-performance solver designed for massively parallel CPU-based systems, we modify the algorithm to reduce random data access, and then insert OpenACC directives. The developed solver on ten Reedbush-H nodes (20 P100 GPUs) attained a speedup of 14.2 times from 20 K computer nodes, which is high considering the peak memory bandwidth ratio of 11.4 between the two systems. On the newest Volta generation V100 GPUs, the solver attained a further 2.45 times speedup from P100 GPUs. As a demonstrative example, we computed 368 cases of crustal deformation analyses of northeast Japan with 400 million degrees of freedom. The total procedure of algorithm modification and porting implementation took only two weeks; we can see that high performance improvement was achieved with low development cost. With the developed solver, we can expect improvement in reliability of crust-deformation analyses by many-case analyses on a wide range of GPU-based systems.
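The abstract's remark that a 14.2 times speedup is "high" relative to a bandwidth ratio of 11.4 can be made concrete with a small calculation. The sketch below is only an illustration using the two figures quoted above; the function name is hypothetical, and the underlying assumption (that a memory-bound solver would be expected to speed up roughly in proportion to peak memory bandwidth) is a common rule of thumb, not a claim taken from the paper.

```python
def bandwidth_normalized_speedup(speedup, bandwidth_ratio):
    """Speedup divided by the peak memory bandwidth ratio.

    A value above 1.0 means the measured speedup exceeds what the
    bandwidth ratio alone would suggest (hypothetical helper).
    """
    if bandwidth_ratio <= 0:
        raise ValueError("bandwidth_ratio must be positive")
    return speedup / bandwidth_ratio


# Figures quoted in the abstract: 20 P100 GPUs vs. 20 K computer nodes.
measured_speedup = 14.2
peak_bandwidth_ratio = 11.4

normalized = bandwidth_normalized_speedup(measured_speedup, peak_bandwidth_ratio)
print(f"speedup relative to bandwidth ratio: {normalized:.2f}")
```

Under that assumption, the reported speedup is about a quarter higher than the bandwidth ratio alone would predict.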

Automatic Testing of OpenACC Applications

Khalid Ahmad

Khalid Ahmad is presenting this paper. Authors: Khalid Ahmad (University of Utah, USA) and Michael Wolfe (NVIDIA Corporation, USA).

Khalid Ahmad is a PhD student in the School of Computing at the University of Utah and holds an M.S. from the University of Utah. His current research focuses on enhancing programmer productivity and performance portability by implementing an auto-tuning framework that generates and evaluates different floating-point precision variants of a numerical or scientific application while maintaining data correctness.

Abstract

CAST (Compiler-Assisted Software Testing) is a feature in our compiler and runtime to help users automate testing high performance numerical programs. CAST normally works by running a known working version of a program and saving intermediate results to a reference file, then running a test version of a program and comparing the intermediate results against the reference file. Here, we describe the special case of using CAST on OpenACC programs running on a GPU. Instead of saving and comparing against a saved reference file, the compiler generates code to run each compute region on both the host CPU and the GPU. The values computed on the host and GPU are then compared, using OpenACC data directives and clauses to decide what data to compare.
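The comparison step described in the abstract (compute the same region twice, then compare the values) can be illustrated with a minimal sketch. This is not CAST's interface or implementation: the function names, the tolerance values, and the stand-in "device" routine are all hypothetical, and both versions run on the CPU here purely to show the idea of a tolerance-based element-wise comparison.

```python
import math


def compare_region(name, host_values, device_values, rel_tol=1e-6, abs_tol=0.0):
    """Compare two result arrays element by element.

    Returns a list of (region name, index, host value, device value)
    tuples for every element that differs beyond the tolerance.
    """
    if len(host_values) != len(device_values):
        raise ValueError("result arrays differ in length")
    mismatches = []
    for i, (h, d) in enumerate(zip(host_values, device_values)):
        if not math.isclose(h, d, rel_tol=rel_tol, abs_tol=abs_tol):
            mismatches.append((name, i, h, d))
    return mismatches


def saxpy_reference(a, x, y):
    """Trusted version of the compute region (plays the role of the host run)."""
    return [a * xi + yi for xi, yi in zip(x, y)]


def saxpy_under_test(a, x, y):
    """Stand-in for the accelerated version; perturbs the last element
    on purpose so the comparison has something to report."""
    out = [a * xi + yi for xi, yi in zip(x, y)]
    out[-1] += 1.0e-3
    return out


x = [1.0, 2.0, 3.0]
y = [0.5, 0.5, 0.5]
report = compare_region("saxpy", saxpy_reference(2.0, x, y), saxpy_under_test(2.0, x, y))
for region, index, host, device in report:
    print(f"{region}[{index}]: host={host} device={device}")
```

A relative tolerance is used rather than exact equality because floating-point results from different hardware or different operation orderings generally do not match bit for bit.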

The authors received beautiful plaques and all presenters were given a copy of the new OpenMP and OpenACC books.

- Best Paper #1

- Best Paper #2

- OpenACC Book given to all speakers

- OpenMP Book given to all speakers

2016

Acceleration of Element-by-Element Kernel in Unstructured Implicit Low-order Finite-element Earthquake Simulation using OpenACC on Pascal GPUs

Takuma Yamaguchi

Takuma Yamaguchi and Kohei Fujita are presenting this paper. Authors: Kohei Fujita, Takuma Yamaguchi, Tsuyoshi Ichimura (University of Tokyo, Japan), Muneo Hori (University of Tokyo and RIKEN, Japan) and Lalith Maddegedara (University of Tokyo, Japan).

Takuma Yamaguchi is a Master’s student in the Department of Civil Engineering at the University of Tokyo and holds a B.E. from the University of Tokyo. His research focuses on high-performance computing for earthquake simulation. More specifically, his work targets fast, GPU-accelerated crustal deformation computation for multiple-case analyses.

Kohei Fujita is a postdoctoral researcher at the Advanced Institute for Computational Science, RIKEN. He received his Dr. Eng. from the Department of Civil Engineering, University of Tokyo in 2014. His research interest is the development of high-performance computing methods for earthquake engineering problems. He is a coauthor of SC14 and SC15 Gordon Bell Prize finalist papers on large-scale implicit unstructured finite-element earthquake simulations.

Kohei Fujita

Abstract

The element-by-element computation used in matrix-vector multiplications is the key kernel for attaining high performance in unstructured implicit low-order finite-element earthquake simulations. We accelerate this CPU-based element-by-element kernel by developing suitable algorithms for GPUs and porting to a GPU-CPU heterogeneous compute environment by OpenACC. Other parts of the earthquake simulation code are ported by directly inserting OpenACC directives into the CPU code. This porting approach enables high performance with relatively low development costs. When comparing eight K computer nodes and eight NVIDIA Pascal P100 GPUs, we achieve 23.1 times speedup for the element-by-element kernel, which leads to 16.7 times speedup for the 3 x 3 block Jacobi preconditioned conjugate gradient finite-element solver. We show the effectiveness of the proposed method through many-case crust-deformation simulations on a GPU cluster.
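For readers unfamiliar with the term, an element-by-element matrix-vector product computes the result from the small per-element matrices directly, without ever assembling the global matrix. The sketch below is a generic textbook illustration on a one-dimensional mesh, not the authors' kernel; the function name, the mesh, and the element matrices are all assumptions made for the example.

```python
def ebe_matvec(elements, element_matrices, x):
    """Compute y = K x without assembling the global matrix K.

    elements:         list of node-index tuples, one per element
    element_matrices: list of small dense matrices (lists of rows),
                      one per element, in the same order
    x:                global input vector
    """
    y = [0.0] * len(x)
    for nodes, k_e in zip(elements, element_matrices):
        # Gather the entries of x that belong to this element.
        x_local = [x[n] for n in nodes]
        # Multiply by the element matrix and scatter-add into y.
        for a, row in enumerate(k_e):
            y[nodes[a]] += sum(k * xl for k, xl in zip(row, x_local))
    return y


# Hypothetical 1D mesh: 4 nodes, 3 two-node elements with unit stiffness.
elements = [(0, 1), (1, 2), (2, 3)]
k_unit = [[1.0, -1.0], [-1.0, 1.0]]
element_matrices = [k_unit, k_unit, k_unit]

x = [0.0, 1.0, 2.0, 3.0]
y = ebe_matvec(elements, element_matrices, x)
print(y)
```

The scatter-add step is the part that needs care in a parallel version, because neighbouring elements update the same entries of `y`.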

The authors were awarded an NVIDIA Pascal GPU.

2015

Acceleration of the FINE/Turbo CFD solver in a heterogeneous environment with OpenACC directives

Authors: David Gutzwiller (NUMECA USA, San Francisco, California), Ravi Srinivasan (Seattle Technology Center, Bellevue, Washington) and Alain Demeulenaere (NUMECA USA, San Francisco, California)

Abstract

Adapting legacy applications for use in a modern heterogeneous environment is a serious challenge for an industrial software vendor (ISV). The adaptation of the NUMECA FINE/Turbo computational fluid dynamics (CFD) solver for accelerated CPU/GPU execution is presented. An incremental instrumentation with OpenACC directives has been used to obtain a global solver acceleration greater than 2X on the OLCF Titan supercomputer. The implementation principles and procedures presented in this paper constitute one successful path towards obtaining meaningful heterogeneous performance with a legacy application. The presented approach minimizes risk and developer-hour cost, making it particularly attractive for ISVs.

The authors were awarded a Quadro M6000 (12 GB).

2014

Achieving portability and performance through OpenACC

Authors: J. A. Herdman, W. P. Gaudin, O. Perks (High Performance Computing, AWE plc, Aldermaston, UK), D. A. Beckingsale, A. C. Mallinson, S. A. Jarvis (University of Warwick, UK)

Abstract

OpenACC is a directive-based programming model designed to allow easy access to emerging advanced architecture systems for existing production codes based on Fortran, C and C++. It also provides an approach to coding contemporary technologies without the need to learn complex vendor-specific languages, or understand the hardware at the deepest level. Portability and performance are the key features of this programming model, which are essential to productivity in real scientific applications.

OpenACC support is provided by a number of vendors and is defined by an open standard. However, the standard is relatively new, and the implementations are relatively immature. This paper experimentally evaluates the currently available compilers by assessing two approaches to the OpenACC programming model: the “parallel” and “kernels” constructs. The implementation of both of these constructs is compared, for each vendor, showing performance differences of up to 84%. Additionally, we observe performance differences of up to 13% between the best vendor implementations. OpenACC features which appear to cause performance issues in certain compilers are identified and linked to differing default vector length clauses between vendors. These studies are carried out over a range of hardware including GPU, APU, Xeon and Xeon Phi based architectures. Finally, OpenACC performance, and productivity, are compared against the alternative native programming approaches on each targeted platform, including CUDA, OpenCL, OpenMP 4.0 and Intel Offload, in addition to MPI and OpenMP.

The authors were awarded a Quadro P5000.